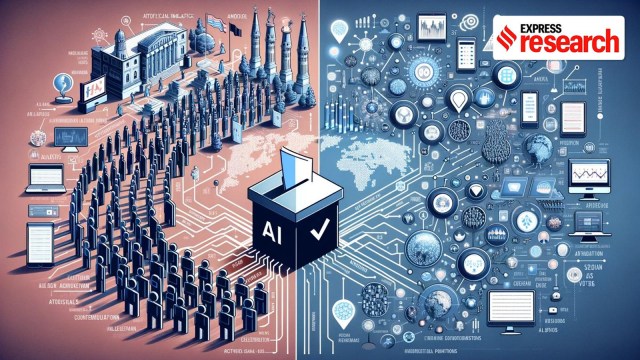

How big is the threat of artificial intelligence to elections?

As we approach high-stakes elections in over 50 countries, the role of AI in shaping voter education and influencing political discourse remains a topic of intense discussion. With the ability to create deepfakes and personalised messages, AI presents both opportunities and risks, challenging governments and organisations to adapt to this rapidly changing technological landscape.

In 2024, AI is poised to significantly influence elections (Generated using DALL-E)

A video of jailed former Pakistani prime minister Imran Khan claiming victory for his allies in the general elections on February 8 caught the world’s attention, because Khan’s party had used artificial intelligence to replicate his voice.

According to Jibran Ilyas, a Chicago-based campaigner, who works for the social media division of Khan’s Pakistan Tehreek-e-Insaf (PTI) party, the initiative helped deliver a message of hope. Speaking with Al Jazeera, Ilyas said Khan remained a crucial figure within the party, and his image could be used to inspire his followers.

The use of AI by the PTI is one example of how the technology can be deployed to revolutionise electoral politics. However, AI-influenced elections remain an unknown entity; one that presents its own opportunities and risks.

2024 will see high-stakes elections in over 50 countries, including India, the US, the UK, Indonesia, Russia, Taiwan, and South Africa. Like in previous elections, one of the biggest challenges voters will face will be the prevalence of fake news, especially as AI technology makes it easier to create and disseminate.

The World Economic Forum’s Global Risks Report 2024 ranked AI-derived misinformation and its potential for societal polarisation as one of its top 10 risks over the next two years. Even industry insiders like OpenAI founder Sam Altman and former Google CEO Eric Schmidt are wary of the technology’s implications, with the latter warning in an article for Foreign Policy magazine that “the 2024 elections are going to be a mess because social media is not protecting us from false generative AI.”

This rapidly changing technological landscape has sparked fierce discussions amongst people who fear AI could open the floodgates of misinformation and those who believe it can be used to transform voter education.

How can AI disrupt elections?

While elections take place within local contexts, the information influencing voter decisions increasingly comes from digital platforms like Facebook, Google, Instagram, and WhatsApp. According to a November 2023 survey by marketing research firm Ipsos, 87 per cent of respondents in 16 countries registered disinformation on social media as one of the biggest factors influencing elections.

As Katie Harbath, the founder of election consultancy Anchor Change, told indianexpress.com, social media has been around for over a decade but globally very few governments have adapted to regulating it. Now, with AI added to the mix, the challenge is even more pertinent. People with very little technical expertise are capable of using generative AI tools to disseminate fake text, images, videos, and audio across a large digital base in multiple different languages, she said.

In addition to spreading propaganda using a small data set, AI is capable of creating deepfakes and generating voice-cloned audio, presenting significant challenges for governments and organisations across the world. According to a report published by the Brookings Institution, the four main threats posed by AI are: increased quantity of misinformation, increased quality of misinformation, increased personalisation of misinformation, and involuntary proliferation of fake but plausible information.

In an interview with the Economist, historian Yuval Noah Harari says we might soon find ourselves conversing about important and polarising topics with entities that we believe are human but are actually AI. Through its mastery of language, AI can form intimate relationships with people, using that intimacy to personalise messages and influence worldviews. “The catch is,” Harari said, “it is utterly pointless for us to spend time trying to change the declared opinions of an AI bot, while the AI could hone its messages so precisely that it stands a good chance of influencing us.”

This personalisation could also make it harder for individuals to distinguish between real and fake news. As Georgetown University professor Josh Goldstein and AI researcher Girish Sastry note in an article for Foreign Affairs Magazine, a recent project showed that an AI agent ranked in the top 10 participants of the board game Diplomacy, in which players negotiate with each other to form alliances. They argue that “if today’s language models can be trained to persuade players to partner in a game, future models may be able to persuade people to take actions — joining a Facebook group, signing a petition, or even showing up to a protest.”

Unknowingly then, content generated by machines could reach the masses through human proliferation. Teenagers in particular are significantly more likely to buy into fake information, underscoring Generation Z’s broad relationship with social media.

A report by the Center for Countering Digital Hate, a British non-profit, found that 60 per cent of teenagers in America agree with four or more harmful conspiracy statements compared to 49 per cent of adults.

According to Harbath, all of this has a cascading effect, creating an overall ecosystem of distrust. “The narrative that AI is going to destroy the information environment alone can make people lose trust in the electoral process,” she states. This, in turn, fuels the phenomenon popularly referred to as the ‘Liar’s Dividend’ in which actors use the proliferation of fake news to sow distrust in the legitimacy of all news, no matter how accurate.

An example of this is the statements of two defendants on trial in the January 6, 2021 Capitol riots case. The two men claimed that the video showing them at the Capitol could have been generated using AI. Both were, however, found guilty.

Misinformation — A ‘moral panic’

While several historians and political scientists are wary of the perils of AI, many others also believe that the threat is overblown. In a report titled Misinformation on Misinformation, University of Zurich fellow Sacha Altay, citing extensive scientific literature, argues that unreliable news constitutes a small portion of people’s information diet and that most people don’t share fake news. As a result of these factors, Altay writes that “alarmist narratives about misinformation should be understood as a moral panic,” the likes of which repeat themselves cyclically.

So far, the evidence seems to support that claim. Approximately 49 national elections have taken place since the launch of Stable Diffusion in August 2022, a free AI software that can create realistic images using textual prompts. Elections in over 30 countries have been held since the launch of ChatGPT in November 2022. So far, while AI has played a role in those elections, it has not definitively shifted the narrative in a single instance.

Echoing that sentiment in an article for The Economist, Dartmouth College professor Brendan Nyhan points out that despite the widespread availability of AI, “we still have not one convincing case of a deepfake making any difference whatsoever in politics.” Earlier, in a controversial interview with NBC, Nyhan claimed people were exposed to far more fake news from Donald Trump than from any social media site.

According to Harbath, AI can be used to positively impact elections, by helping to generate campaign content and creating microtargeting messaging. Examples of movements and candidates that have leveraged AI and social media to reach a larger audience than otherwise considered possible include the Black Lives Matter movement, and the Congressional campaign of Alexandria Ocasio-Cortez.

According to Harbath, in the future, AI can enable these campaigns to “draft more compelling speeches, press releases, social media posts, and other materials.” This in turn, she states, would level the playing field and allow for a more diverse expression of opinions.

As for AI being used to educate voters, the possibilities are also promising. In an article titled Preparing for Generative AI in the 2024 Election, a team from the University of Chicago and Stanford University argues that “distilling policies and presenting them in an accessible format is a capability of large language models that could enhance voter learning.” For instance, an individual could use a language model to summarise a legislative bill or court judgement. The ability of these models to tailor information based on user knowledge can then provide citizens with the opportunity to engage with complicated content in a simple and accessible way. Similarly, voters can use AI language models to write to lawmakers, transforming a once intimidating process into one that can be used effectively by people with limited communication skills.

What has happened in past elections?

We are still in the nascent stages of AI application. Studies published even 12 months ago could be deemed irrelevant by the rapidly increasing capacities of the technology. However, based on the past few elections, some preliminary conclusions can be drawn.

In 2023, an image of Donald Trump falling down a set of stairs was created by Eliot Higgins, the founder of the open-source investigative outlet Bellingcat. While Higgins said he intended for maybe five people to retweet the image, instead it was viewed nearly five million times.

This AI-generated image of Trump falling down stairs while being arrested was viewed nearly 5 million times (Eliot Higgins on X)

In the final weeks of the Argentine presidential campaign last year, Javier Milei published an image of his rival in communist attire, his hand raised aloft in salute. The image was viewed by over three million people on social media, leaving Brookings fellow Darrell West to lament “the troubling signs of AI use” in the Argentine election.

China and Russia have also allegedly used AI to influence foreign elections, particularly in Taiwan. In the weeks leading up to Taiwan’s January 2024 elections, a 300-page e-book containing false sexual allegations about outgoing president Tsai Ing-wen began circulating on social media. Tim Niven, the lead researcher at DoubleThink Lab, a Taiwanese organisation that monitors Chinese influence operations, was initially surprised that someone would bother to publish a fake book in the age of condensed social media reporting. However, Niven and his team soon began to see videos on Instagram, YouTube, TikTok and other platforms featuring avatars generated using AI, reading out different parts of the book.

According to Niven, the book had become “a script for generative AI videos” and may itself have been a product of the same technology. His subsequent investigation led him to believe “with very high confidence” that the campaign was the handiwork of the Chinese Communist Party. Lai Ching-te, the anti-Beijing candidate from Tsai’s party, ended up winning the election, but for the first time since 2000, without achieving a majority in the legislature.

In a report for Foreign Policy magazine, journalist Rishi Iyengar said that China’s actions in Taiwan may prove to be a barometer for its strategy on a global level. Quoting Adam King of the International Republican Institute, a nonprofit organisation, he wrote, “if you look back to the pandemic in 2020, the Taiwanese were the ones sounding the alarm way before the rest of the world woke up, and I think it’s the same for disinformation.”

Harbath echoes King’s concerns but also sees Taiwan as a positive case study, citing the strong network of fact-checking the island nation has put in place following decades of Chinese misinformation campaigns.

AI also featured in Bangladesh elections in January this year. According to an investigative report published by the Financial Times, pro-government news outlets and influencers in Bangladesh promoted disinformation using AI at a meagre cost of USD 24 per month. While the incumbent Sheikh Hasina won, likely independent of any AI influence, the bigger concern was how the misinformation was reported by the media. The FT report found that news agencies like the Australian Institute of International Affairs, the Bangkok Post, and the LSE blog all published stories citing fake reports attributed to fake authors affiliated with the likes of Jawaharlal Nehru University and the National Institute of Singapore.

Perhaps most significant was the influence of AI in the September 2023 Slovakian elections. As is standard in many countries, Slovakia imposes a moratorium on public announcements by politicians and media outlets in the 48 hours leading up to polls closing. During that time frame, an AI-manipulated audio recording began to circulate in which the leader of the liberal Progressive Slovakia party discussed how to rig the election. Despite Slovakian publications later denouncing the audio as fake, the fact that it was released during the news blackout period limited the media’s capacity to debunk the story. Although it is difficult to ascertain the impact the viral clip had on voters, it is notable that the Progressive Slovakia party went from trailing slightly in the polls to finishing a distant second.

The Council on Foreign Relations, the Brookings Institution, the World Economic Forum and a number of other prominent think tanks have all cited the Slovakian example in highlighting the risk AI poses to elections, which in turn raises questions for the world’s most populous country and largest democracy, India.

AI and Indian elections

In India, where a considerable fraction of the electorate gets its information from social media or messaging apps, AI is a potent and affordable tool. According to a political consultant who spoke on the condition of anonymity, content generated by language models such as ChatGPT is often used to construct messages shared across various WhatsApp groups. For INR 6,000 a month, he says, a party can hire a WhatsApp administrator who controls a group of 200 to 300 people. Using AI-generated text, that administrator or influencer can then share content amongst a micro-targeted group, usually in rural areas. Most of the messages target youth voters and employ propaganda against rival candidates. In some cases, photos and videos of those candidates engaged in illicit activities have also been released, he says.

Harbath, who worked for X (earlier Twitter) in India during the last Lok Sabha election, notes that most social media platforms imposed strict guardrails against fraudulent news. Stephen Stedman of Stanford University, in a 2022 interview with Reuters, said that Facebook alone deployed 800 people to prevent misinformation leading up to the 2019 Lok Sabha elections.

However, that has already begun to change. Last year, companies like Meta and X, in a bid to cut costs, reduced the size of their teams working on internet trust and safety.

According to a media lawyer based in Mumbai, formerly tasked with moderating content on Twitter, since Elon Musk’s takeover, the platform has implemented massive cuts that have “increased the amount of fake news on the platform.” While OpenAI and Meta have announced their intention to curb AI-generated political news, the lawyer said that in India, that alone is not enough.

Moreover, for closed social media platforms like WhatsApp, the content moderation guardrails are different from those of platforms like Instagram and, in most cases, much harder to implement. Therefore, if a deepfake video were to be released and subsequently deleted from social media platforms, the damage might already be done by the time that action is taken, with the content already widely disseminated across messaging apps.

In India, AI is also being used by politicians to reach out to more people, especially in rural areas. For example, a real-time AI-powered tool was used to translate Prime Minister Narendra Modi’s speech from Hindi to Tamil during an event in Uttar Pradesh in December 2023.

Jaspreet Bindra, the founder of the Tech Whisperer, an advisory firm, told indianexpress.com that for the Indian electorate, personalising messages could be particularly useful. “Personalising messages at scale but for small segments, minor sub-segments, like parts of the constituency or villages or the Panchayat could be exceedingly effective for an electorate which is very diverse and with literacy levels that are not particularly very high. And so it is much more useful in India I believe than in many other places,” Bindra says.

As with any new technology, there is a question of how AI can be used and misused. Many respond to the prospect with alarm, while others see its potential as a positive force. Harbath admits that there are still too many unknowns to contend with. Her advice — panic responsibly.
